Have you ever found that you really want an extremely tiny and simple Genetic Algorithm method for optimizing a set of doubles (the genotype) given a fitness function? Me too!!! So, I wrote one, because it seemed fun.

I’m using it to evolve two toy problems, later I hope to show it with a slightly less toyish problem.

It’s really small (224 lines of code in a single file). It would be very easy to make it smaller – but then it’d be less readable. It would be equally simple to add new functionality, but then it would be larger and would no longer qualify for the name PicoGA. You can find PicoGA here http://picoga.codeplex.com/

What can you use a GA for?

First, two toy problems;

Find three doubles that sum up to five!

Not very difficult, but as I said, it’s a toy problem. First we must specify;

- a fitness function

- a population size (how many individuals (I just picked 20 for this simple problem))

- a genome size / number of genes (3).

That comes out as one line of code;

GA ga = new GA(

20, // Individuals

3, // Genotype length (number of doubles)

individual => // Fitness function

Math.Abs(5 - individual.Genotype.Sum(val => val))

);

Simple! Now we need to run that for a couple of generations – but PicoGA takes care of that also. For each generation, we publish the results, if they’ve improved since the previous generation;

private static void SumTo5()

{

Console.WriteLine("Sum to 5:...");

GA ga = new GA(

20, // Individuals

3, // Genotype length (number of doubles)

individual => // Fitness function

Math.Abs(5 - individual.Genotype.Sum(val => val))

);

ga.RunEpoch(

500, // Number of generations to run for

null, // Action to perform for each generation

() => // Action to perform once fitness has improved

{

Console.WriteLine(

"Gen {2}: Fit={1}, Genotype={0}",

string.Join(

" ",

ga.BestIndividual.Genotype.Select(val => val.ToString("0.00"))),

ga.BestIndividual.Fitness.ToString("0.00"),

ga.CurrentEpochGeneration);

});

Console.WriteLine("Sum to 5: done!");

Console.WriteLine("");

}

Voilá, that’s all there is to it! For my test run, at generation 45 a solution is found;

PicoGA, demos

Sum to 5:…

Gen 0: Fit=3,39, Genotype=0,84 0,50 0,26

Gen 1: Fit=3,26, Genotype=0,97 0,50 0,26

…

Gen 43: Fit=0,04, Genotype=2,45 1,38 1,13

Gen 44: Fit=0,01, Genotype=2,48 1,38 1,13

Gen 45: Fit=0,00, Genotype=2,49 1,38 1,13

Sum to 5: done!

Find 1 2 3 4 5

For my second toy problem, I want the GA to find the values 1 2 3 4 5 (in that exact order) for it’s five genes. Again, not a very useful thing to do, possibly even less useful than my first example, but it’s a toy problem;

private static void Find12345()

{

Console.WriteLine("Find 1 2 3 4 5:...");

GA ga = new GA(

200, // Number of individuals

5, // Number of genes in the genotype

individual => // Fitness function

Math.Abs(individual.Genotype[0] - 1) +

Math.Abs(individual.Genotype[1] - 2) +

Math.Abs(individual.Genotype[2] - 3) +

Math.Abs(individual.Genotype[3] - 4) +

Math.Abs(individual.Genotype[4] - 5));

ga.RunEpoch(500, null, () =>

{

Console.WriteLine(

"Gen {2}: Fit={1}, Genotype={0}",

string.Join(

" ",

ga.BestIndividual.Genotype.Select(

val => val.ToString("0.00"))),

ga.BestIndividual.Fitness.ToString("0.00"),

ga.CurrentEpochGeneration);

});

Console.WriteLine("Find 1 2 3 4 5: done!");

Console.WriteLine("");

}

At generation 105 a perfect solution is found. The reason it takes so long is that genes only mutated slowly and reaching 5 took a while. I’ve since upped mutation rate.

Gen 105: Fit=0,00, Genotype=1,00 2,00 3,00 4,00 5,00

Ok, but what can I really use it for? No more toy problems!

Well, lots and lots of stuff!

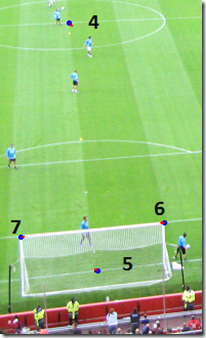

I once had an image of a soccer field and I needed a 3D perspective projection matrix that matched the image. I needed to render football players as they were moving around the pitch, for which I had pitch coordinates. But I needed to convert those pitch coordinates to screen coordinates, which isn’t as simple as it sounds.

The 3d Perspective Projection Matrix contains all information about the setup of the camera and target as the image was shot. Camera Field Of View, position of camera relative to subject, orientation of camera with regard to subject etc. For this I used a genotype of 18 genes (in effect 18 doubles).

The data we need

The pitch looked something like this;

First I measure points on the I could pinpoint pixels in the image and determine where they where in soccer field space and tie that to image space coordinates.

I compiled a list like this (a soccer pitch is 105 x 68 meters)

- Pixel 165,178=> World 0, 0, 0

- Pixel 377,120=> World 52.5, 0, 0

- Pixel 513, 85=> World 105, 0, 0

- Pixel 473, 157=>World 52.5, 34, 0

- Pixel 611, 208 =>World 52.5, 68, 0

- Pixel 735, 136 => World 105, 68, 0

There are analytical methods of retrieving the matrix given only that information, but I don’t know them and I couldn’t find them by googling. But I know GA, and using GA I evolved the matrix!

The fitness function

The fitness function is basically that we feed the six world coordinates into the 3d transformation matrix and sum up the errors of the pixel coordinates it suggests as compared to the pixel coordinates we previously measured. Trivial. Not really, because the formula for transforming from 3D space to 2D screen space isn’t trivial. This is the code I wound up using for this;

private static Point3D ProjectCase(Point3D point, List<double> l)

{

Point3D p = new Point3D(

point.X - l[12] * 100,

point.Y - l[13] * 100,

point.Z - l[14] * 100);

Point3D result = new Point3D(

l[0] * p.X + l[1] * p.Y + l[2] * p.Z + l[3],

l[4] * p.X + l[5] * p.Y + l[6] * p.Z + l[7],

l[8] * p.X + l[9] * p.Y + l[10] * p.Z + l[11]);

if (result.Z != 0)

{

result.X *= l[15] / result.Z;

result.Y *= l[15] / result.Z;

}

result.X += l[16] * 100;

result.Y += l[17] * 100;

return result;

}

Some of the times, PicoGA quickly (in a matter of minutes) finds a matrix that it likes. I’ve plotted in the pixels that the generated matrix produces in the picture below. They’re not perfect, but neither are the measurements that were used for generating the matrix, so they can never be perfect. The red dots are where the evolved matrix suggested;

Here’s the program for evolving the matrix;

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Windows.Media.Media3D;

namespace PicoGA

{

public static class FindMatrix

{

internal class WorldToScreenCase

{

public WorldToScreenCase(double screenX, double screenY, double worldX, double worldY)

{

Screen = new Point3D(screenX, screenY, 0);

World = new Point3D(worldX, worldY, 0);

}

internal Point3D Screen { get; set; }

internal Point3D World { get; set; }

}

public static void FindProjectionMatrix()

{

Console.WriteLine("Find ProjectionMatrix:...");

List<WorldToScreenCase> cases =

new List<WorldToScreenCase>

{

// Old Image

new WorldToScreenCase(165,178 , 0, 0),

new WorldToScreenCase(377,120 , 52.5, 0),

new WorldToScreenCase(513,85 , 105, 0),

new WorldToScreenCase(473,157 , 52.5, 34),

new WorldToScreenCase(611,208 , 52.5, 68),

new WorldToScreenCase(735,136 , 105, 68),

};

GA ga = new GA(

2000, // Number of individuals

18, // Number of genes in the genotype

individual => // Fitness function

{

double errorSum = 0;

foreach (WorldToScreenCase test in cases)

{

Point3D testScreen = ProjectCase(test.World, individual.Genotype);

double sqrError = (testScreen - test.Screen).LengthSquared;

errorSum += sqrError;

}

return errorSum;

});

ga.RunEpoch(10000,

() =>

{

ga.BreakEpochRun = ga.BestIndividual.Fitness <= 1.0 || Console.KeyAvailable;

},

() =>

{

Console.WriteLine(

"Gen {2}: Fit={1}, Genotype={0}",

string.Join(

" ",

ga.BestIndividual.Genotype.Take(5).Select(

val => val.ToString("0.00"))),

ga.BestIndividual.Fitness.ToString("0.00"),

ga.CurrentEpochGeneration);

});

if (Console.KeyAvailable)

{

Console.ReadKey();

}

Console.WriteLine("Results for training set:");

foreach (WorldToScreenCase test in cases)

{

ShowTestResult(ga, test);

}

Console.WriteLine("");

Console.WriteLine("Additional tests:");

ShowTestResult(ga, new WorldToScreenCase(120, 73, 105, 34));

Console.WriteLine("Find ProjectionMatrix: done!");

Console.WriteLine("");

}

private static void ShowTestResult(GA ga, WorldToScreenCase test)

{

Point3D p = ProjectCase(test.World, ga.BestIndividual.Genotype);

Console.WriteLine(

" World ({0:0.00}, {1:0.00}) => \n" +

" Wanted:({2:0.00}, {3:0.00}) \n" +

" Received:({4:0.00}, {5:0.00})",

test.World.X,

test.World.Y,

test.Screen.X,

test.Screen.Y,

p.X,

p.Y);

}

private static Point3D ProjectCase(Point3D point, List<double> l)

{

Point3D p = new Point3D(

point.X - l[12] * 100,

point.Y - l[13] * 100,

point.Z - l[14] * 100);

Point3D result = new Point3D(

l[0] * p.X + l[1] * p.Y + l[2] * p.Z + l[3],

l[4] * p.X + l[5] * p.Y + l[6] * p.Z + l[7],

l[8] * p.X + l[9] * p.Y + l[10] * p.Z + l[11]);

if (result.Z != 0)

{

result.X *= l[15] / result.Z;

result.Y *= l[15] / result.Z;

}

result.X += l[16] * 100;

result.Y += l[17] * 100;

return result;

}

}

}

You can find PicoGA here http://picoga.codeplex.com/.